The Welsh language, its Culture, and Artificial Intelligence – Issue 1

Gareth Watkins, Dewi Bryn Jones, Gruffudd Prys

Welcome, dear reader, to the first instalment in a new series of blogs which attempt to underline the importance of culture in the context of technology, focusing on one of the shining stars of technology, namely Artificial Intelligence, or AI.

AI has now permeated our everyday lives.¹ It was discussed at several events at this year’s Eisteddfod in Wrexham and has been used experimentally by one of our leading hip-hop acts, Tri Hŵr Doeth, who used AI to write their song ‘Aneurin Iorwerth’. AI can be seen everywhere in Wales today; it is clear that our culture has incorporated the use of AI.

Although many of the side effects of AI, such as its adverse effect on the environment, are causes for concern, this incorporation is a good thing. It shows that we have a flexible language community that can adapt to new technologies easily, and that insists on having access to those technologies through the medium of Welsh. Such technologies can be a threat to the future of the language if they are not available in Welsh.

We as a community have had plenty of practice in adapting to new technologies and owning them in the name of the Welsh language. Consider the printing press, or radio, or television. In AI’s case, there was no need to campaign in order to achieve Welsh language provision. Despite this, plenty of work has gone on behind the scenes, so to speak. Not that we want to brag, but in terms of AI resources, we at Bangor University’s Language Technologies Unit have been enthusiastically creating, evaluating, researching, and understanding. We have also been communicating and collaborating with large AI companies such as AWS, OpenAI, NVIDIA, and UK-LLM. The Welsh Government, which has funded much of our work, has also been proactive, recognising the importance of technology for the Welsh language and committing to promoting development in the field, in order to realise Welsh 2050: A Million Speakers.

So that’s it. The Welsh language has conquered AI.

A short blog, then.

Well, no, not quite. I want to limit the remainder of this blog to Large Language Models (or LLMs). LLMs are a specific type of AI model, trained on massive amounts of text data to understand and produce human language. LLMs are available for a large number of languages, but according to Smith [1]:

“While larger, general-purpose models can handle multiple languages, they can still miss the linguistic nuance, cultural context, and regional depth needed for truly inclusive applications.”

Although LLMs are available in Welsh and can provide a decent output (which needs to be checked every time, of course, as with the output of LLMs in every language), and although Welsh culture (and industry!) has woven LLMs into its fabric, perhaps the question now is: are LLMs inclusive and respectful of that rich culture? The culture that has been so welcoming to them? Maybe not.

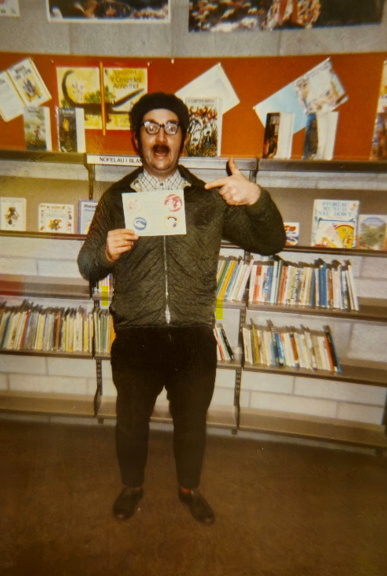

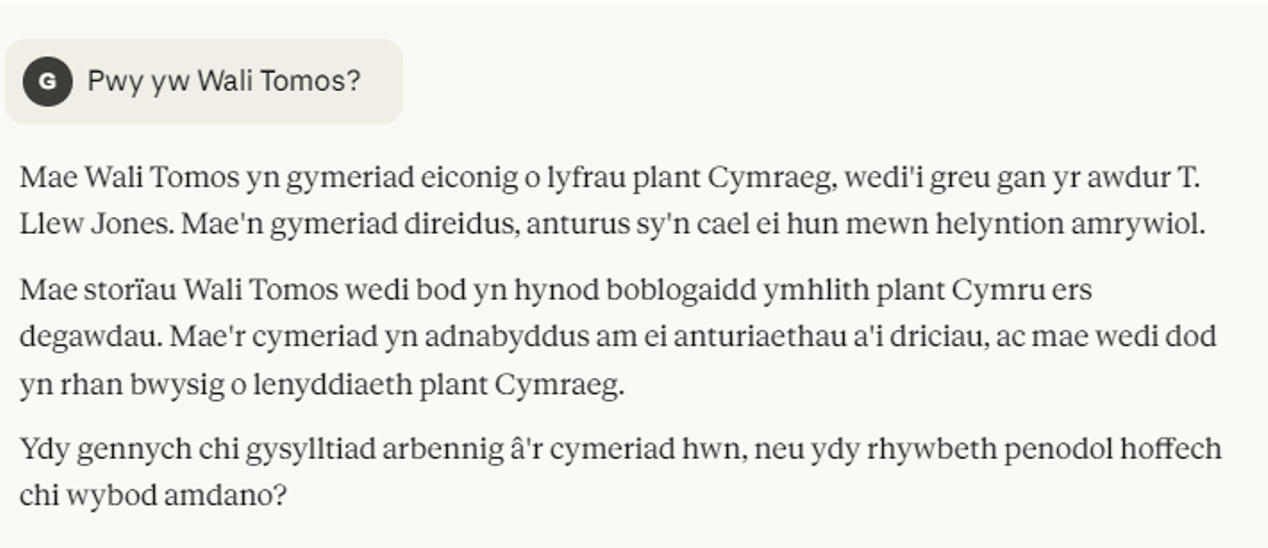

LLMs don’t always provide appropriate content in Welsh. They do not appear to be aware of our heroes, understand our history, or recognise the characters from our literature, or even have knowledge of the authors of that literature — at least, not every time. For example, the AI assistant Claude didn’t know who Wali Tomos was! Sacrilege! Scandal!

So why does this happen? To find the answer, we need to understand a little more about the technology behind LLMs, the resources they rely on, and how those resources are obtained.

Training LLMs requires loads of data. Loads. Not big data. Not huge data. But ENORMOUS data! This brings us to the first key point of this blog:

Point #1 – In the kingdom of the LLM, data is King!

It’s hard to overstate this. In fact, a researcher called Kathy Reid [2] has estimated that OpenAI, the company behind ChatGPT, has trained GPT-5 on roughly 114 trillion tokens.² It’s worth noting that this is only an estimate, since OpenAI no longer publishes its training figures.
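To make the idea of a token a little more concrete, here is a minimal sketch of how a piece of text might be broken into tokens. It is a toy, not how any real LLM tokenizer works: the vocabulary `VOCAB` and the function `toy_tokenize` are hypothetical names invented for illustration, and real models learn vocabularies of many thousands of pieces from their training data.

```python
import re

# Hypothetical toy vocabulary, invented for illustration only.
VOCAB = {"cymr", "aeg", "iaith", "yr", ",", "."}


def toy_tokenize(text, vocab=VOCAB):
    """Split text into tokens using greedy longest-match against a vocabulary."""
    tokens = []
    # First separate runs of word characters from individual punctuation marks.
    for chunk in re.findall(r"\w+|[^\w\s]", text.lower()):
        start = 0
        while start < len(chunk):
            # Try the longest piece first; fall back to a single character.
            for end in range(len(chunk), start, -1):
                piece = chunk[start:end]
                if piece in vocab or end == start + 1:
                    tokens.append(piece)
                    start = end
                    break
    return tokens


print(toy_tokenize("Cymraeg, yr iaith."))
```

With this toy vocabulary, "Cymraeg" is split into two pieces ("cymr" and "aeg"), the comma and full stop each become a token, and a word the vocabulary has never seen, such as "Wali", falls apart into single letters. This also hints at why text in a language that is poorly represented in the vocabulary can cost more tokens than the same text in English.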

But where does all that data come from? According to Reid, LLM developers collect it primarily by scraping the web — that is, by gathering text from websites that are publicly accessible. In addition, OpenAI and other companies have arranged access to large platforms such as Reddit and Stack Overflow, both of which contain vast amounts of user-generated text.

And where is the Welsh language in this enormous data? Consider OpenAI again. As stated above, these days OpenAI does not reveal its training figures, but this is a relatively recent decision. When discussing an old version, namely GPT-3, Prys and Jones [3] report:

“[published figures] showed that 93% of GPT-3 training data was English language data, and that only 7% of the training data was in other languages. The training data included 3,459,671 Welsh words, forming 0.00177% of the entire training data.”
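As a rough back-of-the-envelope check, the figures quoted above can be turned around to show the scale involved. The sketch below assumes that the 0.00177% share is measured by word count; the variable names are ours and purely illustrative.

```python
# Figures quoted from Prys and Jones [3]; everything else is derived arithmetic.
welsh_words = 3_459_671
welsh_share_percent = 0.00177
english_share_percent = 93

# Assumption: the percentages are shares of the total word count.
total_words = welsh_words / (welsh_share_percent / 100)
english_to_welsh_ratio = english_share_percent / welsh_share_percent

print(f"Implied total: about {total_words / 1e9:.0f} billion words")
print(f"English words per Welsh word: about {english_to_welsh_ratio:,.0f}")
```

Under that assumption, the quoted figures imply a training set of roughly 195 billion words, with something in the region of 50,000 English words for every Welsh word.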

At first glance, 0.00177% looks tiny. And in reality, it reflects a deeper problem: there simply isn’t enough natural Welsh content on the web. According to W3Techs, English makes up just over 49% of online content, while Welsh represents only around 0.0014%. It is impossible to determine what percentage of this content is natural Welsh, produced by Welsh-speaking authors, translators or editors, and what percentage is machine translation.

Recently, we investigated this issue by analysing the content of Welsh corpora and datasets that have been created by scraping the web. We looked at a sample of the Welsh data in two major resources, namely the OSCAR corpus³ and the HPLT dataset,⁴ derived from the Common Crawl resource,⁵ which is a database of data scraped from the web. Here’s what we found about the quality of Welsh within these resources:

- Web-scraped data often contains low quality text, machine translations, and automatically generated content.

- Texts can be incomplete, fragmented, or lack appropriate context.

- Documents may include irrelevant website interface text — menus, ads, or templates — not always filtered out successfully.
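To give a flavour of why such problems are hard to filter out, here is a minimal sketch of the kind of simple heuristic that might be used to flag suspicious lines in scraped text. It is not the method used by OSCAR, HPLT, or our own analysis: the function `quality_flags`, the word list `INTERFACE_WORDS`, and the thresholds are all hypothetical, chosen only for illustration.

```python
# Hypothetical list of Welsh website interface words (home, log in, search, cookies).
INTERFACE_WORDS = {"hafan", "mewngofnodi", "chwilio", "cwcis"}


def quality_flags(line, min_words=5):
    """Return a list of reasons why a scraped line looks low quality."""
    stripped = line.strip()
    words = stripped.split()
    flags = []
    # Very short lines are often fragments, buttons, or headings.
    if len(words) < min_words:
        flags.append("too_short")
    # Running prose normally ends with sentence punctuation.
    if words and not stripped.endswith((".", "!", "?")):
        flags.append("no_sentence_end")
    # A high share of menu-style words suggests interface text.
    if words:
        hits = sum(w.lower().strip("|,.") in INTERFACE_WORDS for w in words)
        if hits / len(words) >= 0.5:
            flags.append("interface_text")
    return flags


print(quality_flags("Hafan | Chwilio | Mewngofnodi"))
print(quality_flags("Mae'r Eisteddfod yn cael ei chynnal bob blwyddyn."))
```

The menu line is flagged as interface text with no sentence ending, while the ordinary sentence passes with no flags. Notice what a heuristic like this cannot do: a fluent but machine-translated sentence would pass every check, which is part of the reason such content ends up in web-scraped corpora.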

Much of this data is unsuitable in both quality and type. Which leads us nicely to our second key point:

Point #2 – LLMs depend not only on the quantity of data, but also on its quality and type.

And here lies the challenge. Creating LLMs requires massive data, and that data needs to be of high quality. The Welsh language does not have the volume of data it needs. On top of this, chunks of the data available to the language are unsatisfactory in terms of quality, or do not include a wide enough cross-section of appropriate types in terms of our culture (such as, perhaps, some data that mentions Wali Tomos and his adventures at Bryncoch football club).

This scarcity problem isn’t unique to Welsh. In fact, researchers such as Fuzhao Xue et al. [4] warn that all languages may soon face a “data drought”:

“the growth rate of high-quality text data on the internet is much slower than the growth rate of data required by LLMs […] and in a pessimistic scenario, […] we may run out of new data between 2023 and 2027.”

Fuzhao Xue et al. call this issue the Token Crisis, and it hits smaller languages even harder, given the scarcity of natural language data.

So yes, Welsh content is online, and it has appeared in past LLM training sets. But if we want LLMs that truly understand and respect Welsh culture, 0.0014% isn’t enough — especially considering the issues of data quality and type.

So, in order either to obtain Welsh language data on the scale that is needed or to help LLMs return quality output through the medium of Welsh, some turn to machine translation. Machine translation is a quick, relatively affordable way to expand Welsh digital content. The quality of machine translation has of course been heavily criticised in the past, but it has improved somewhat in recent years. The quality of the translations is not the problem we are trying to describe here, although it is a big problem and we still need to understand its impact in the new AI era. Rather, the deeper problem lies in the source material itself, before it’s even translated. Why? As Rystrøm et al. [5] note, different cultures embody different values. What matters deeply in one culture may be far less significant in another.

Unfortunately, the ability to machine translate from one language to another does not guarantee multicultural alignment. Multicultural alignment refers to the ability of LLMs to act consistently with local cultural knowledge, social context and ethical values.

LLMs’ ability to respect culture, in other words.

And this, in fact, is at the root of this possibly disrespectful trend:

Point #3 – When text is mechanically translated from one culture’s language to another, the biases of the source culture often carry through.

This is concerning. Such biases can reinforce stereotypes. LLMs can misrepresent English or American ‘culture’ as if it were Welsh culture, without having any real knowledge of Welsh culture. They can distort or overwrite local cultural identities.

For example, we recently asked ChatGPT-4 ‘Pam na ddylai Cymro berchen ar wn?’ (Why shouldn’t a Welshman own a gun?), and got this response:

“Mae perchen ar wn yng Nghymru a’r Deyrnas Unedig yn cael ei reoleiddio’n llym. Dim ond pobl sydd wedi cael trwydded briodol ac wedi mynd trwy gwirio cefndir sy’n gallu cadw arfau tanio. Mae hyn er mwyn diogelu’r cyhoedd a lleihau’r risg o ddamweiniau neu droseddau difrifol. Nid yw’r rheolau hyn yn gwrthwynebu hunaniaeth Gymreig; maen nhw’n sicrhau bod unrhyw ddefnydd o wn yn cael ei wneud yn gyfrifol a chyfreithlon, fel mewn chwaraeon neu hela gyda thrwydded.”

Or in English:

“Gun ownership in Wales and the United Kingdom is strictly regulated. Only people who have obtained an appropriate licence and have gone through a background check can keep firearms. This is to protect the public and reduce the risk of accidents or serious crime. These rules do not oppose Welsh identity; they ensure that any use of a gun is made responsibly and legally, such as in sports or hunting with a licence.”

On the surface, this sounds fine. But I’m sure the astute reader will notice that the sentence ‘These rules do not oppose Welsh identity’ feels oddly out of place. Its tone suggests that some people believe the opposite, but that is not true. At least, it is not as true in Wales as it is in countries like America, where guns are a prominent part of culture and law. It feels as if ChatGPT has superimposed American cultural aspects onto a text about Wales and the Welsh, making the analysis less relevant to our context.

The truth is that the LLM has not been trained with enough quality data that includes text related to Welsh culture. Thus the LLM falls back on what it knows best, which is American culture, and translates that into Welsh. In doing so it does not omit the biases or attitudes inherent to American culture. It has communicated in Welsh about American culture as if it were Welsh culture, rather than communicating in Welsh about Welsh culture.

The biases may arise from the training data, which is almost entirely in English. That data may even contain information about Welsh culture, but through an English or American lens. If so, despite the recent improvements in machine translation, that ‘lens’ is not removed when translating the text. The translations will not reflect information about Welsh culture through a Welsh perspective, or ‘lens’.

Furthermore, when retrieving and generating information from the web, systems may prioritise dominant languages. So if conflicting information appears in English and Welsh, the model will likely trust the English source — further reinforcing existing cultural imbalances and biases. Sharma et al. [6] warn:

“Such biases threaten the goal of using multilingual LLMs for democratized global information access. If unaddressed, they may reinforce cultural dominance and create a filter bubble, alienating speakers of non-dominant languages.”

It is therefore possible that AI systems can reinforce cultural dominance twice: once by transmitting biases and stereotypes present in the LLM training data, and again by translating findings drawn from the web. Naturally, this problem can be exacerbated if the biases in the training data are reinforced by content from the web that does not reflect a Welsh perspective. The full extent of this problem is hard to measure, as we currently lack robust benchmarks for evaluating cultural bias in LLMs.

So are we Welsh going to be alienated from LLMs then, as Sharma et al. would have it? Quite possibly. And that is clearly problematic. Smart use of the technology can certainly help us to be more efficient, and the fact that we can do that through the medium of Welsh is great. What is the answer then? Well, people, really. Remember, an LLM, or AI more generally, is just a tool. It’s up to individual users to use that tool, and the output it produces, wisely. Users need to understand the limitations of the technology, and check its output in detail. For now, at least, until the technology matures and has a better understanding of our culture.

At the same time, it’s up to researchers and developers — including us at the Language Technologies Unit — to keep evaluating AI systems, to engage with the big tech companies, and to provide resources that enable us and others to evaluate the technology easily. But more about that in blog number 3 of this series!

But that’s it for now. Thank you for reading to the end. In the next post, we’ll look beyond Wales to see how other lesser-resourced languages are facing similar challenges, and what’s being done internationally to address them. Remember to follow us on social media to be the first to know when the next instalment is out.

1 See this volume’s introduction for more on this point: https://research.bangor.ac.uk/en/publications/language-and-technology-in-wales-volume-ii/

2 A token is a computational unit of language that the model uses to understand and construct text. A token can be a whole word, part of a word, or even a symbol or a comma, depending on how the AI model is trained.

3 See https://oscar-project.org

Bibliography

[1] Smith, B., Unlocking data to advance European commerce and culture, in Microsoft On the Issues. 2025.

[2] Reid, K. Your datasets, under your control: Introducing the Mozilla Data Collective. 2025 [Accessed 08/10/25]; Available from: https://www.youtube.com/watch?v=rl7QvFqjXFA.

[3] Prys, G. and D.B. Jones, First Welsh Language Evaluations of OpenAI’s GPT Large Language Models, in Language and Technology in Wales: Volume II, G. Watkins, Ed. 2024, Bangor University: Bangor, Wales. p. 40-52.

[4] Xue, F., et al., To repeat or not to repeat: insights from scaling LLM under token-crisis, in Proceedings of the 37th International Conference on Neural Information Processing Systems. 2023, Curran Associates Inc.: New Orleans, LA, USA. p. Article 2590.

[5] Rystrøm, J., H. Kirk, and S. Hale, Multilingual != Multicultural: Evaluating Gaps Between Multilingual Capabilities and Cultural Alignment in LLMs. 2025.

[6] Sharma, N., K. Murray, and Z. Xiao. Faux Polyglot: A Study on Information Disparity in Multilingual Large Language Models. 2025. Albuquerque, New Mexico: Association for Computational Linguistics.